Researchers at OpenAI have introduced a new neural network called DALL-E, which can create images and photos from a text description alone. The model has 12 billion parameters and was trained on images from the internet paired with text captions. As a result, the system does not search for existing images in open sources; the neural network generates them itself, according to the OpenAI website.

In mid-2020, OpenAI introduced GPT-3, a language model that can produce text close to human-written text from a given prompt. The new DALL-E is a version of GPT-3 that takes English-language text queries and outputs images. The system can generate realistic photos, illustrations, and new combinations of objects. In addition, the neural network can render text within an image and attempt tasks from visual IQ tests.

The authors of the neural network explained that the name DALL-E is a portmanteau of the names of the artist Salvador Dalí and the robot WALL-E from the animated film of the same name.

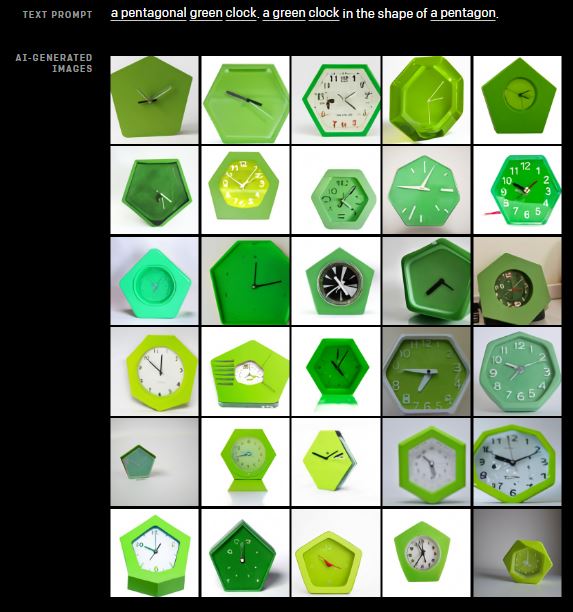

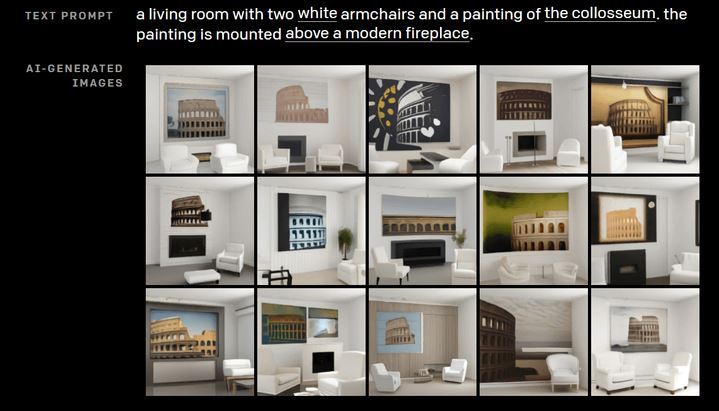

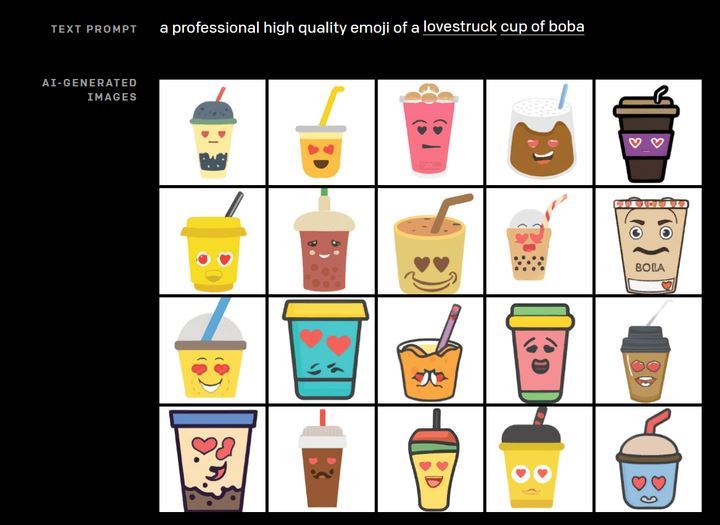

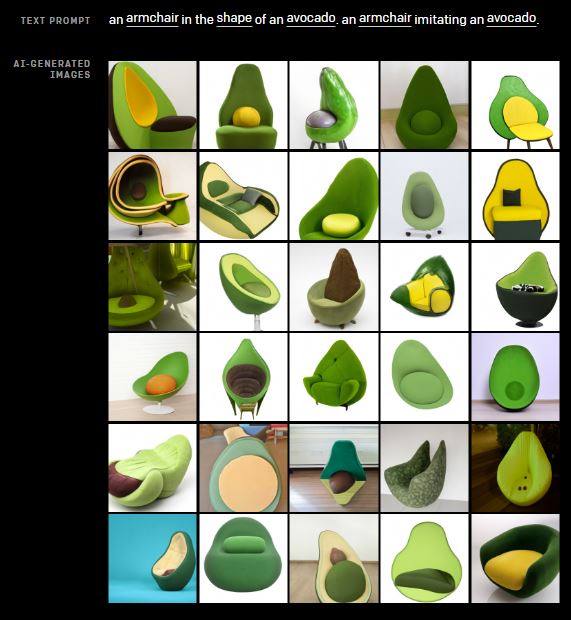

Examples of images created by the DALL-E neural network:

1) Image generated from the prompt: “pentagonal green clock” / Image: OpenAI

2) Image generated from the prompt: “living room with two white armchairs and a painting of the Colosseum” / Image: OpenAI

3) Image generated from the prompt: “professional high-quality emoji of a lovestruck cup of bubble tea” / Image: OpenAI

4) Image generated from the prompt: “avocado-shaped armchair” / Image: OpenAI

5) Image generated from the prompt: “San Francisco from the street at night” / Image: OpenAI

The algorithm not only understands individual words but also picks up the relationships between them within a phrase. The quality of its output, however, depends heavily on how complex the request is. The developers plan to publish a detailed description of how DALL-E works soon.